Paul Dodds, Professor of Energy Systems, UCL

Earth’s surface temperature has been 1.5°C hotter than the pre-industrial average for 21 of the last 22 months.

The 2015 Paris agreement committed countries to keeping the global temperature increase “well below 2°C”, which is widely interpreted as an average of 1.5°C over a 30-year period. The Paris agreement has not yet failed, but recent high temperatures show how close the Earth is to crossing this critical threshold.

Climate scientists have used computer simulations to model pathways for halting climate change at internationally agreed limits. In recent years, however, many of the published pathways involve exceeding 1.5°C for a few decades and then removing enough greenhouse gas from the atmosphere to return Earth’s average temperature below the threshold again. Scientists call this “a temporary overshoot”.

If human activities were to raise the global average temperature 1.6°C above the pre-industrial average, for example, then CO₂ removal, using methods ranging from habitat restoration to mechanically capturing CO₂ from the air, would be required to return warming to below 1.5°C by 2100.

Do we really understand the consequences of “temporarily” overshooting 1.5°C? And would it even be possible to lower temperatures again?

Faith that a temporary overshoot will be safe and practicable has justified a deliberate strategy of delaying emission cuts in the short term, some scientists warn. The dangers posed by remaining above the 1.5°C limit for a period of time have received little attention from researchers like me, who study climate change.

To learn more, the UK government commissioned me and a team of 36 other scientists to examine the possible impacts.

How nature will be affected

We examined a “delayed action” scenario, in which greenhouse gas emissions remain similar for the next 15 years due to continued fossil fuel burning but then fall rapidly over a period of 20 years.

We projected that this would cause the rise in Earth’s temperature to peak at 1.9°C in 2060, before falling to 1.5°C in 2100 as greenhouse gases are removed from the atmosphere. We compared this scenario with a baseline scenario in which the global temperature does not exceed 1.5°C of warming this century.

Our Earth system model suggested that Arctic temperatures would be up to 4°C higher in 2060 compared to the baseline scenario. Arctic sea ice loss would be much higher. Even after the global average temperature was returned to 1.5°C above pre-industrial levels, in 2100, the Arctic would remain around 1.5°C warmer compared to the baseline scenario. This suggests there are long-term and potentially irreversible consequences for the climate in overshooting 1.5°C.

As global warming approaches 2°C, warm-water corals, Arctic permafrost, Barents Sea ice and mountain glaciers could reach tipping points at which substantial and irreversible changes occur. Some scientists have concluded that the west Antarctic ice sheet may have already started melting irreversibly.

Our modelling showed that the risk of catastrophic wildfires is substantially higher during a temporary overshoot that culminates in 1.9°C of warming, particularly in regions already vulnerable to wildfires. Fires in California in early 2025 are an example of what is possible when the global temperature is higher.

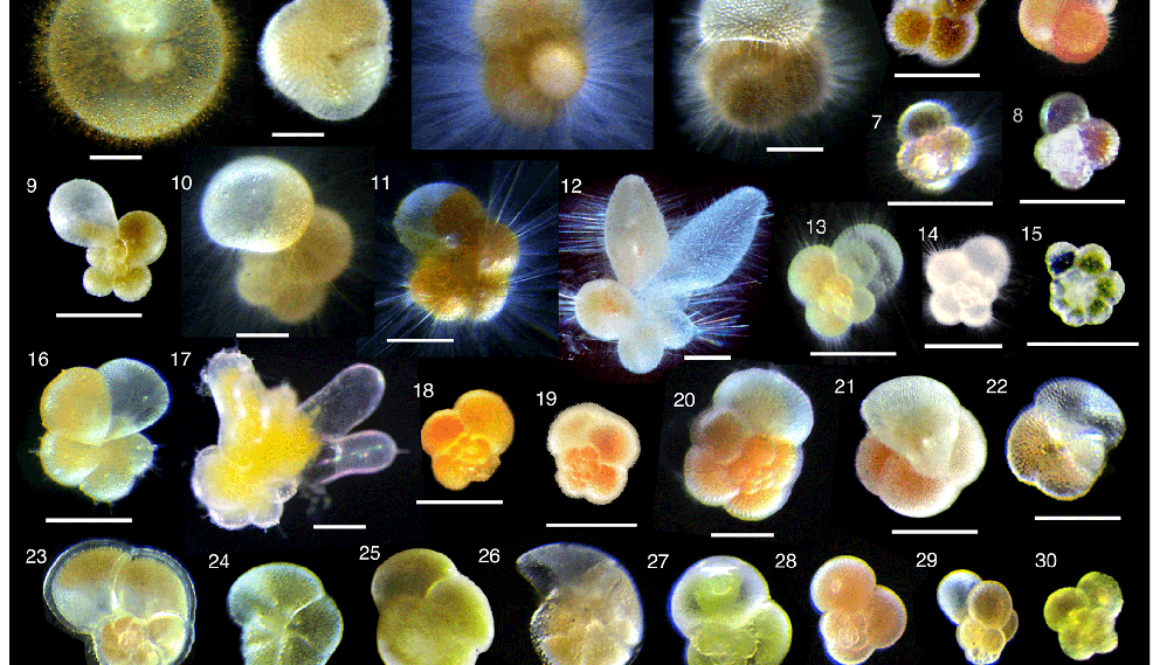

Our analysis showed that the risk of species going extinct at 2°C of warming is double that at 1.5°C. Insects are most at risk because they are less able to move between regions in response to the changing climate than larger mammals and birds.

The impacts on society

Only armed conflict is considered by experts to have a greater impact on society than extreme weather. Forecasting how extreme weather will be affected by climate change is challenging. Scientists expect more intense storms, floods and droughts, but not necessarily in places that already regularly suffer these extremes.

In some places, moderate floods may reduce in size while larger, more extreme events occur more often and cause more damage. We are confident that the sea level would rise faster in a temporary overshoot scenario, and further increase the risk of flooding. We also expect more extreme floods and droughts, and for them to cause more damage to water and sanitation systems.

Floods and droughts will affect food production too. We found that impact studies have probably underestimated the crop damage that increases in extreme weather and water scarcity in key production areas during a temporary overshoot would cause.

We know that heatwaves become more frequent and intense as temperatures increase. Scarcer food and water would increase the health risks of heat exposure beyond 1.5°C. It is particularly difficult to estimate the overall impact of overshooting this temperature limit when several impacts reinforce each other in this way.

In fact, most alarming of all is how uncertain much of our knowledge is.

For example, we have little confidence in estimates of how climate change will affect the economy. Some academics use models to predict how crops and other economic assets will be affected by climate change; others infer what will happen by projecting real-world economic losses to date into future warming scenarios. For 3°C of warming, model-based estimates of the annual impact on GDP range from -5% to +3%, while estimates based on the latter approach reach -55%.

We have not managed to reconcile the differences between these methods. The highest estimates account for changes in extreme weather due to climate change, which are particularly difficult to determine.

We carried out an economic analysis using estimates of climate damage from both models and observed climate-related losses. We found that temporarily overshooting 1.5°C would reduce global GDP compared with not overshooting it, even if economic damages were lower than we expect. The consequences for the global economy could be profound.

So, what can we say for certain? First, that temporarily overshooting 1.5°C would be more costly to society and to the natural world than not overshooting it. Second, our projections are relatively conservative. It is likely that impacts would be worse, and possibly much worse, than we estimate.

Fundamentally, every increment of global temperature rise will worsen impacts on us and the rest of the natural world. We should aim to minimise global warming as much as possible, rather than focus on a particular target.



This article is republished from The Conversation under a Creative Commons license. Read the original article.